I recently heard Jeff Bezos briefly talk about his views on LLMs here. Less than a minute into the conversation, he said something that struck a chord with me:

LLMs in their current form are not inventions, they are discoveries.

He followed that up with “we are constantly surprised by their capabilities and they are not really engineered objects.” That quote resonated with me because this we-don’t-know aspect has been standing out in my mind as I read more about LLMs and marvel at the concept of emergent behavior in software. Some outlets have characterized this innate unknowability as frightening, but I find it exciting and rather captivating because it points to the sheer potential of what (good) these models may be capable of. It eerily parallels what Steven Johnson wrote about ants and cities in his 2002 book Emergence, describing how system-level abilities and behaviors can differ from unit-level abilities and behaviors.

Since then, I’ve marveled every time I’ve heard an extremely qualified speaker say something similar about LLMs. So much so that I started keeping a list:

- In a National Academy of Sciences lecture on the Future of AI, Prof. Melanie Mitchell points out (at the 25:25 mark) the many internal layers of a transformer block that extract meaning and says that “we don’t really know,” “even the people who made this system don’t know” how it’s updating the internal weights. “All we know is the training data that has been given.”

- In Stanford’s CS224N Lecture 10, the instructor implies (at the 19:55 mark) that we don’t know how the model is able to perform in zero-shot and few-shot scenarios without doing any gradient updates. “...it’s an active area of research,” we are told. Perhaps the model is doing in-context gradient descent as it’s encoding a prompt (!). Also, at the 22:32 mark, prompt engineering is described as a “total, dark arcane art.”

- In Harvard’s CS50 Tech Talk, at around the 7:35 mark, the ML researcher explains that as we give the model more and more training data, it gets more capable and intelligent for “reasons we don’t understand.”

- In Lecture 9 of Stanford’s CS224N, the instructor explains how chain-of-thought prompting works “perhaps like a scratchpad for the model,” since it generates a plausible sequence of steps to solve a problem, which leads to correct answers much more often. At the end of that segment, around the 1:13:40 mark, the instructor says “no one is really sure why this works either.” His explanation made intuitive sense to me, though: the scratchpad-like approach is perhaps allowing the user to implicitly put more computation into the generation.

- At MIT’s 2023 GenAI Summit, the speaker gives an apt analogy (at the 18:16 mark): we can’t say we fully understand how the brain works just because we know about neurons. And “that’s the same level of understanding we have about these [Large Language] models...”

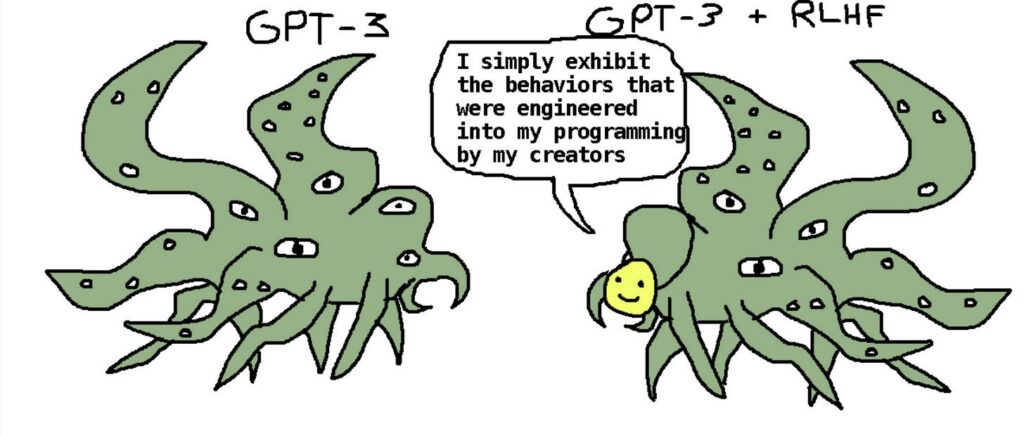

If you’ve come across other instances of authoritative sources in the AI field embracing the unknown about LLMs, please share. The shoggoth meme (pictured) already captures this fascinatingly unknown aspect of AI. Finding the specific contexts in which this acknowledgment shows up is very interesting, since it underscores how much is yet to be revealed in AI. After all, they are not really engineered objects.

[Image based on original post by Twitter user @TetraSpaceWest]